A bit dramatic title. But yes, EF Core, paired with Linq Contains method negatively impacts your SQL server performance and observability. Let’s dig in.

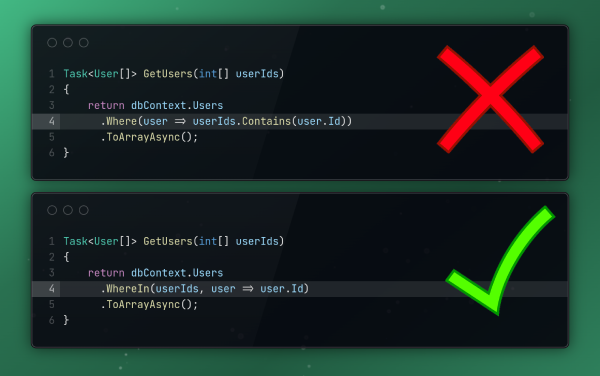

EF Core does not translate Linq Contains method correctly. The query produced is not parameterized. Meaning that for different arguments, different SQL queries are produced.

Consider the following code, where users with IDs 1 and 2 are loaded:

async Task<User[]> LoadUsers()

{

var userIds = new[] { 1, 2 };

return await dbContext

.Users

.Where(x => userIds.Contains(x.Id))

.ToArrayAsync();

}The SQL query this code snippet produces is not parameterized, and has hardcoded values:

SELECT [u].[Id], [u].[FirstName], [u].[LastName]

FROM [Users] AS [u]

WHERE [u].[Id] IN (1, 2)Different arguments and different number of arguments will produce similar, but different queries.

There are 2 reasons why this is bad:

SQL Server cache pollution

Whenever a query needs to be executed, SQL server has to:

- Evaluate the query and produce a new query plan.

- Store the new query plan.

- Potentially evict some other cached query plan. The evicted plan might be frequently used.

- Execute the query based on the plan.

All this unnecessary work incurs a performance penalty.

Observability metrics of the SQL Server are skewed

Consider how the SQL Server cache will look like. Most of the stored queries will be used only once. It is virtually impossible to know the number of times the query above executed, or what was the average or total CPU/IO price for these queries.

How can you optimize what you can’t measure?

What can be done to fix the issue?

At the moment, we can mitigate this, but not fix it completely. The good news is, EF Core team is aware of it, and might produce a solution. There are already a few approaches, although none made it to EF Core 8.

The WhereIn (See it on GitHub) extension method aims to solve this. It is a modified version compared to the one suggested in the GitHub issue linked above. After all, we build on the shoulders of the giants.

The usage is quite similar:

async Task<User[]> LoadUsers()

{

var userIds = new[] { 1, 2 };

return await dbContext.Users

.WhereIn(userIds, x => x.Id)

.ToArrayAsync();

}The query produced is now completely parameterized:

SELECT [u].[Id], [u].[FirstName], [u].[LastName]

FROM [Users] AS [u]

WHERE [u].[Id] = @__v_0 OR [u].[Id] = @__v_1How does this extension work?

There are 3 distinct steps in the method:

Step 1: Create the expression tree

Consider the following code:

var users = await dbContext.Users

.Where(x => x.Id == userIds[0] || x.Id == userIds[1])

.ToListAsync();This code will produce a completely parameterized query with two parameters. We want to achieve the same, without writing the OR statements. Instead, we create an expression tree that is equivalent to the expression inside the Where clause.

The resulting query is now parameterized. But, we still had a new query for each number of elements in the userIds array.

Step 2: Bucketize the values

With the previous step, we now have a parameterized query. However, the number of parameters is dependent on the number of elements passed in the array to the function. If we were to call this with 1000 arrays of different length, we would end up with 1000 different, parameterized queries.

In this step, we try to reduce the number of queries. It can be done by predefining bucket sizes, and then using the last element in the array to fill the bucket to it’s size. The predefined bucket sizes are the power of 2, which means we have buckets with sizes 1, 2, 4, 8..

For example, we want to query for user ids 1, 2 or 3. The bucket this fits into is going to be 4. The expression for this userIds array will be equivalent to:

var users = await dbContext.Users

.Where(x => x.Id == 1 || x.Id == 2 || x.Id == 3 || x.Id == 3)

.ToListAsync();The default bucketizer will create 10 queries for any number of elements in the array up to 1024. It is not perfect, but helps.

Step 3: Fallback to Contains method in case of too many arguments

SQL Server has a limitation of 2047 parameters in the query. Queries with more parameters than this limit will fail. Given that the previous approach, when we used Contains would work (because there are no parameters, only hard-coded values), it is possible to fallback to this approach when the number of arguments is too great.

What is different compared to the original extension method?

Creating the expression tree

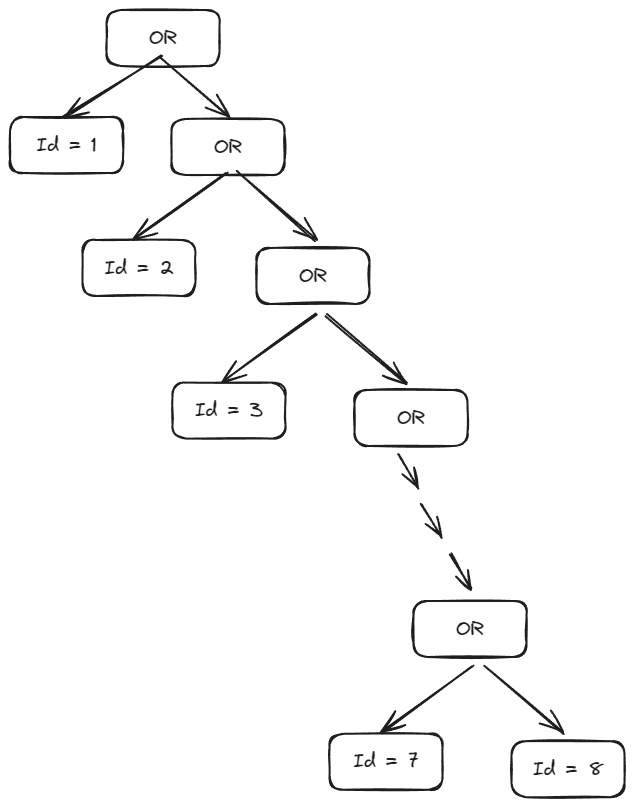

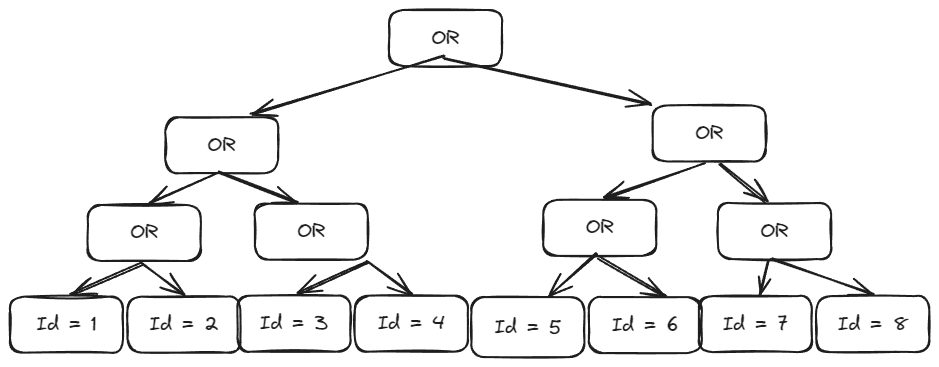

In the original implementation, when the number of elements in the array is greater than 1024, EF Core will fail with exception. The reason is the aggregate function used to generate the expression. The expression tree will look like (for number of elements N = 8):

This three will have N+1 depth, where N is number of elements. Instead, we create create a balanced binary tree, so that the depth is log(N). This means, to reach the same depth as before, you need 1024 ^ 2 elements in the array. The expression tree now looks like this:

This is one of those cases where knowing binary sort and binary trees is useful.

Custom bucketizer support

Usually there are well known predefined bucket sizes you may use in your project. This implementation allows custom bucketizers to be used, to do micro optimizations for these cases.

For example, you might always load 20, 50 and 100 entries from the database. In that case, it would be better to create a custom bucketizer and further reduce the number of generated SQL queries.

Fun facts

As I was writing this post, I checked the original github issue – someone posted another approach to solve the same issue.

Links

The code linked on GitHub is under MIT license.